Everywhere I go to present MySQL InnoDB Cluster, during the demo of creating a cluster, many people doesn’t understand why when I’ve 2 members, my cluster is not yet tolerant to any failure.

Indeed when you create a MySQL InnoDB Cluster, as soon as you have added your second instance, you can see in the status:

"status": "OK_NO_TOLERANCE",

"statusText": "Cluster is NOT tolerant to any failures.",

Quorum

Why is that ? It’s because, to be part of primary partition (the partition that holds the service, the one having a Primary-Master in Single Primary Mode, the default mode), your partition must reach the majority of nodes (quorum). In MySQL InnoDB Cluster (and many other cluster solutions), to achieve quorum, the amount of members in a partition must be > (bigger) than 50%.

So when we have 2 nodes, if there is a network issue between the two servers, the cluster will be split in 2 partitions. And each of it will have 50% of the amount of total members (1 of 2). Is 50% > than 50% ?? No! That’s why none of the partition will reach quorum and none will allow queries in case of MySQL InnoDB Cluster.

Indeed, the first machine will see that it won’t be able to reach the second machine anymore… but why ? Is the second machine who died ? Am I having network interface issues ? We don’t know, so we cannot decide.

Let’s take a look at this cluster of 3 members (3/3 = 100%):

If we take a look in the cluster.status()output, we can see that with 3 nodes we can tolerate one failure:

"status": "OK",

"statusText": "Cluster is ONLINE and can tolerate up to ONE failure.",

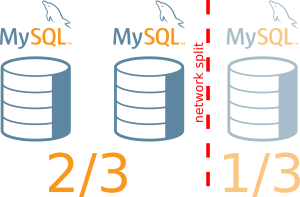

Now let’s imagine we have a network issue that will isolate one of the members:

We can see in cluster.status()output that the node is missing:

Our cluster will still be able to serve transactions has one partition still has quorum (2/3 = 66%, which is bigger than 50%).

"mysql6:3306": {

"address": "mysql6:3306",

"mode": "n/a",

"readReplicas": {},

"role": "HA",

"status": "(MISSING)"

}

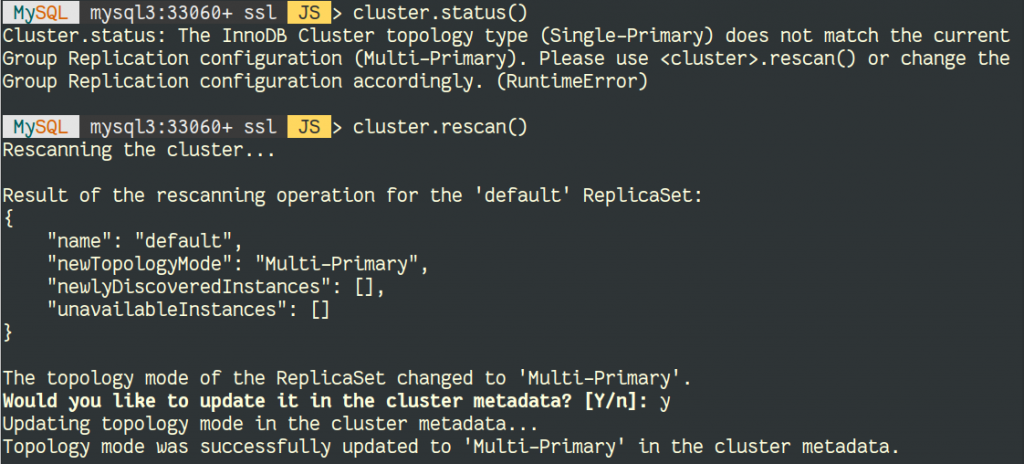

There is a very important concept I want to cover as this is not always obvious. The cluster is different in InnoDB Cluster and in Group Replication. Indeed, InnoDB Cluster relies on metadata created by the DBA using the MySQL Shell. Those metadata describes how the cluster has been set up. Group Replication sees the cluster differently. It sees it as it was last time it checked and how it’s right now… and updates that view. This is commonly called, the view of the world.

So in the example above, InnoDB Cluster sees 3 nodes: 2 online and 1 missing. For Group Replication, for a short moment, the partitioned node was UNREACHABLE and few second later, after being ejected from the Group by the majority (so only if there is still a majority), the node is not part of the cluster anymore. The Group size is now 2 of 2 (2/2 not 2/3). This information is exposed via performance_schema.replication_group_members

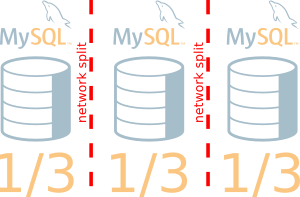

If our network issue would have been more serious and would split our cluster in 3 like the picture below, the cluster would be “offline” as none of the 3 partition would have reached quorum majority, 1/3 = 33% (<50%):

In this case the MySQL service won’t work properly until a human fixes the situation.

Fixing the situation

When there is no more primary partition in the cluster (like the example above), the DBA needs to restore the service. And as usual, there is already some information in the MySQL error log:

2019-04-10T13:34:09.051391Z 0 [Warning] [MY-011493] [Repl] Plugin group_replication

reported: 'Member with address mysql4:3306 has become unreachable.'

2019-04-10T13:34:09.065598Z 0 [Warning] [MY-011493] [Repl] Plugin group_replication

reported: 'Member with address mysql5:3306 has become unreachable.'

2019-04-10T13:34:09.065615Z 0 [ERROR] [MY-011495] [Repl] Plugin group_replication

reported: 'This server is not able to reach a majority of members in

the group. This server will now block all updates. The server will

remain blocked until contact with the majority is restored. It is

possible to use group_replication_force_members to force a new group

membership.

From the message, we can see that this is exactly the situation we are explaining here. We can see in cluster.status()that the cluster is “blocked” :

"status": "NO_QUORUM",

"statusText": "Cluster has no quorum as visible from 'mysql4:3306'

and cannot process write transactions.

2 members are not active",

We have two solutions to fix the problem:

- using SQL and Group Replication variables

- using the MySQL Shell’s adminAPI

Fixing using SQL and Group Replication variables

This process is explained in the manual (Group Replication: Network Partitioning).

On the node the DBA wants to use to restore the service, if there is only one node left we can use the global variable group_replication_force_members and use the GCS address of the server that you can find in group_replication_local_address(if there are multiple servers online but not reaching the majority, all should be added to this variable):

SQL set global group_replication_force_members=@@group_replication_local_address;

Be careful that the best practice is to shutdown the other nodes to avoid any kind of conflicts if they reappear during the process of forcing quorum.

And the cluster will be again available. We can see in the error log that the situation has been resolved:

2019-04-10T14:41:15.232078Z 0 [Warning] [MY-011498] [Repl] Plugin group_replication

reported:

'The member has resumed contact with a majority of the members in the group.

Regular operation is restored and transactions are unblocked.'

Don’t forget to remove the value of group_replication_force_members when you are back online:

SQL set global group_replication_force_members='';

When the network issue are resolved, the nodes will try to reconnect but has we forced the membership, those nodes will be rejected. You will need to rejoin the Group by:

- restarting mysqld

- or restarting again group replication (

stop group_replication; start group_replication) - or using MySQL Shell (

cluster.rejoinInstance())

Using the MySQL Shell’s adminAPI

The other option is to use the adminAPI from the MySQL Shell. This is the preferable option of course ! With the AdminAPI you don’t even need to know the port used for GCS to restore the quorum.

In the example below, we will use the server called mysql4 to re-activate our cluster:

JS cluster.forceQuorumUsingPartitionOf('clusteradmin@mysql4')

And when the network issues are resolved, the Shell can also be used to rejoin other instances (in this case mysql6) :

JS cluster.rejoinInstance('clusteradmin@mysql6')

Conclusion

When for any reason you have lost quorum on your MySQL InnoDB Cluster, don’t panic. Choose the node (or list of nodes that can still communicate with each others) you want to use and if possible shutdown or stop mysqld on the other ones. Then MySQL Shell is again your friend and use the adminAPI to force the quorum and reactive your cluster in one single command !

Bonus

If you want to know if your MySQL server is part of the primary partition (the one having the majority), you can run this command:

mysql> SELECT IF( MEMBER_STATE='ONLINE' AND ((

SELECT COUNT() FROM performance_schema.replication_group_members

WHERE MEMBER_STATE NOT IN ('ONLINE', 'RECOVERING')) >=

((SELECT COUNT()

FROM performance_schema.replication_group_members)/2) = 0), 'YES', 'NO' )

in primary partition

FROM performance_schema.replication_group_members

JOIN performance_schema.replication_group_member_stats

USING(member_id) where member_id=@@global.server_uuid;

+----------------------+

| in primary partition |

+----------------------+

| NO |

+----------------------+

Or by using this addition to sys schema: addition_to_sys_GR.sql

SQL select gr_member_in_primary_partition();

+----------------------------------+

| gr_member_in_primary_partition() |

+----------------------------------+

| YES |

+----------------------------------+

1 row in set (0.0288 sec)